Eggbots and Inverse Panoramas

Projects with the Raspberry Pi.

Eggbots are, generically, computer-controlled drawing machines. Give them a flat, 2D Cartesian drawing and they draw it onto objects with an axis of cylindrical symmetry, such as ping-pong balls, eggs, light bulbs and wine glasses. Basically, x/y coordinates are re-purposed as longitude/latitude. I got 'into' eggbots some years ago. They are a fantastic introduction to CAM, and can be made easily from stepper motors stripped out of old printers, scanners and the like, plus hot-melt glue, BluTak, etc! In the 'old days' we used parallel ports (remember them? LPT1, etc.) and made motor drivers via software logic and discrete transistors.
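As a rough illustration of that re-purposing, here is a minimal Python sketch in which x across the drawing becomes rotation of the object and y becomes the swing of the pen arm. The function name, the drawing size and both step counts are assumptions made up for the example, not figures from any particular eggbot.

```python
def xy_to_steps(x, y, width, height, egg_steps_per_rev=3200, pen_steps_span=800):
    """Map a point on a flat drawing to (egg motor steps, pen motor steps).

    egg_steps_per_rev and pen_steps_span are hypothetical values; measure
    your own mechanics before relying on them.
    """
    # x wraps around the object: one drawing width is one full revolution.
    longitude_fraction = (x / width) % 1.0
    # y runs from one end of the pen's travel to the other, centred on zero.
    latitude_fraction = y / height - 0.5
    egg_steps = round(longitude_fraction * egg_steps_per_rev)
    pen_steps = round(latitude_fraction * pen_steps_span)
    return egg_steps, pen_steps


if __name__ == "__main__":
    # Centre of a 200 x 100 drawing: half a turn round, pen at mid-travel.
    print(xy_to_steps(100, 50, 200, 100))
```

Note that the right-hand edge of the drawing maps back to the same place as the left-hand edge, which is why patterns meant for an eggbot should join up across that seam.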

Inverse panoramas are the reverse. Take an object with cylindrical symmetry and create from it a flat, 2D 'un-wrapped' view. I thought this up as a way to create photographic-quality records of an antique vase our family has, but in the event it is too valuable to 'play' with, hence this Poole pottery jug! There are many objects, like vases or jugs, which have decoration all round their surface. A photo can only show one direction: the rest is either foreshortened and distorted or, for the back and sides, invisible.


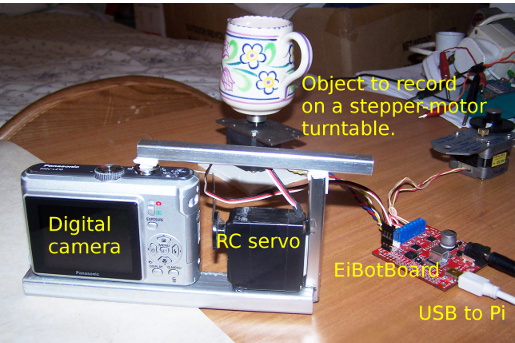

By mounting the object on a stepper-motor turntable, it is easy to get the rotate-and-step sequence. I'm still experimenting to see whether it is better to use a webcam or a digital camera. Things will change once the Pi camera is released. At present I use an external camera, since I have only lo-res webcams available, and use a servo to operate it. This means using an SD card to transfer the images.

I can automate the merging of the images via ImageMagick. At present it is easy to do the cropping and joining; it just needs a suitable strip size to be chosen for a given object. Any necessary scaling and colour/contrast adjustment could also be automated. It may be a better idea to feather the edges and overlap them a bit.
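The cropping and joining can be sketched in Python as below. This is only one plausible way to do it, not necessarily the commands I actually ran: it assumes the object's axis sits in the centre of every frame, that the camera is far enough away for a simple sine rule to give the strip width, and that strips are simply butted together with no feathering. The function names and file names are invented for the example; `-crop`, `+repage` and `+append` are standard ImageMagick options.

```python
import math


def strip_width_pixels(radius_px, shots_per_rev):
    """Width in pixels of the central strip each shot contributes.

    radius_px is the object's apparent radius in the photo. A distant
    camera is assumed, so a turn of 360/shots_per_rev degrees spans a
    chord of 2 * r * sin(angle / 2) across the middle of the frame.
    """
    step_angle = 2 * math.pi / shots_per_rev
    return round(2 * radius_px * math.sin(step_angle / 2))


def crop_command(src, dst, image_width, image_height, strip_width):
    """Build a convert command that keeps a full-height central strip."""
    x_offset = (image_width - strip_width) // 2
    geometry = f"{strip_width}x{image_height}+{x_offset}+0"
    return ["convert", src, "-crop", geometry, "+repage", dst]


def join_command(strips, dst):
    """Build a convert command that joins the strips side by side."""
    return ["convert", *strips, "+append", dst]


if __name__ == "__main__":
    width = strip_width_pixels(radius_px=150, shots_per_rev=30)
    print(crop_command("shot_000.jpg", "strip_000.jpg", 640, 480, width))
    print(join_command(["strip_000.jpg", "strip_001.jpg"], "unwrapped.jpg"))
```

Each returned list can be handed to `subprocess.run` on a machine with ImageMagick installed. The order in which the strips are joined has to match the direction the turntable rotates, or the pattern comes out mirrored.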

After I'd got this working I discovered that others have played with this, particularly in the context of recording, say, a human head from every direction, to be used for texture-mapping onto a 3D model of a head. But then it's very difficult to invent something really new!

It is incredibly easy to drive eggbots, since a free add-on to Inkscape, the Linux vector drawing package, sends its output to the two stepper motors and the pen-control servo. You just download an extra file and unzip it into a particular directory.

WARNING. On my (early) Pi, Inkscape takes AGES to load and render. But it happily runs an eggbot as fast as my mechanics allow...

I do however often use my main PC instead, and I also drive the eggbots and other machines in Liberty BASIC or Python.

You require only a single interface board called an EiBotBoard, available from SparkFun for about 50 dollars (and from SK Pang and other agents), which plugs into a USB port and is seen by Linux as a serial port. It has two microstepping stepper drivers and umpteen digital I/O and servo outputs. You just need a power supply for the motors; I use a 12V 1A power brick. The board logic and servo draw power via the USB.

Now you can first test the setup with a serial terminal such as Minicom. Typical commands are

    sm,5000,0,1536

which says take 5000 ms and move the pen motor by 1536 steps, or

    tp

which toggles the pen servo between preset up and down positions.

Once you are happy the motors do what they should, you can write Python code like this (as used for the inverse panorama photos):

    #!/usr/bin/env python3
    import time

    import serial  # the pyserial package

    # The EBB shows up under Linux as a USB serial port.
    ser = serial.Serial("/dev/ttyACM0", 115200)

    # Check the EBB is there and print its version string.
    ser.write(b"v\r\n")
    time.sleep(1)
    print(ser.readline().decode().strip())

    while True:
        print("Rotate & take photo..")
        print("Step motor #1 120 steps, taking 1 second, then wait 1s more.")
        # 1000ms, motor 1 120 steps, motor 2 none.
        ser.write(b"sm,1000,120,0\r\n")
        s = ser.readline()  # read the board's reply
        # print(s)
        time.sleep(2)

        print("Pen servo cw on pin 4. Delay 2 seconds.")
        # tp toggles the pen servo, which here operates the camera.
        ser.write(b"tp\r\n")
        s = ser.readline()
        # print(s)
        time.sleep(2)

        print("Pen servo acw on pin 4. Delay 2 seconds.")
        # Toggle again to return the servo to its rest position.
        ser.write(b"tp\r\n")
        s = ser.readline()
        # print(s)
        time.sleep(2)
        print("")

ImageMagick is great for processing images. I resized a sequence of camera shots into the animated GIF with a single convert command, quoted further down this page.

For the 'inverse' set-up, I simply wrote short Python scripts to rotate the camera or object and to trigger the pictures. What I REALLY want (real soon now???) is the Pi camera unit. I especially want to do some y/t photos, as used for photo finishes in athletics and horse racing.
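The sm strings in the Python script above can also be built by a small helper, which makes it easier to try other step sizes. This is a minimal sketch: the function names are invented here, and the steps-per-revolution figure in the example is hypothetical, since it depends on your motor, microstepping setting and any gearing.

```python
def sm_command(duration_ms, motor1_steps, motor2_steps):
    """Format an sm move as bytes, in the same form the script sends."""
    text = f"sm,{duration_ms},{motor1_steps},{motor2_steps}\r\n"
    return text.encode("ascii")


def shots_per_revolution(steps_per_rev, steps_per_shot):
    """Number of photos in one full turn of the turntable.

    Raises ValueError if the step size does not divide the revolution
    exactly, since the last strip would then overlap the first.
    """
    if steps_per_rev % steps_per_shot != 0:
        raise ValueError("steps_per_shot must divide steps_per_rev exactly")
    return steps_per_rev // steps_per_shot


if __name__ == "__main__":
    # 3600 steps per revolution is a hypothetical figure for illustration.
    print(shots_per_revolution(3600, 120))
    print(sm_command(1000, 120, 0))
```

Choosing a step size that divides the full revolution exactly means the last strip meets the first one cleanly when the un-wrapped image is joined up.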

Using Inkscape to run an eggbot

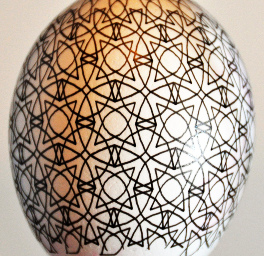

With the modified Inkscape running, any drawing can be reproduced on your chosen object. The illustration shows a bitmap, but the original pattern is an SVG vector file. These can also be generated algorithmically from your choice of geometric functions. The other two photos show this regarded as a cylindrical object, and then 'wrapped' onto an egg.
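As an example of generating a pattern algorithmically, this short Python sketch writes a sine wave as an SVG polyline that Inkscape can open. The function name and the default page size are assumptions chosen for illustration, not the settings of any particular eggbot template.

```python
import math


def wave_svg(width=3200, height=800, cycles=8, amplitude=300, points=400):
    """Return an SVG document containing one sine-wave polyline.

    A whole number of cycles is used so that the wave meets itself at
    the seam when the drawing is wrapped round the object.
    """
    coords = []
    for i in range(points + 1):
        x = width * i / points
        y = height / 2 + amplitude * math.sin(2 * math.pi * cycles * i / points)
        coords.append(f"{x:.1f},{y:.1f}")
    path = " ".join(coords)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">'
        f'<polyline points="{path}" fill="none" stroke="black"/></svg>'
    )


if __name__ == "__main__":
    with open("wave.svg", "w") as handle:
        handle.write(wave_svg())
```

Swapping the sine for any other function of i gives a different pattern, as long as it returns to its starting value at the right-hand edge.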


The ImageMagick command that made the animated GIF was

    C:\IM>convert +matte -resize 260x170 *.jpg animn.gif

and I use ImageMagick for all the cropping of images.

You may want to visit my earlier pages at svgGen and 360 degree camera. I have many other Liberty BASIC pages indexed at Diga Me!