The 'web holds good example code, and my investigations were helped by bluatigro's work translating Python/LB.

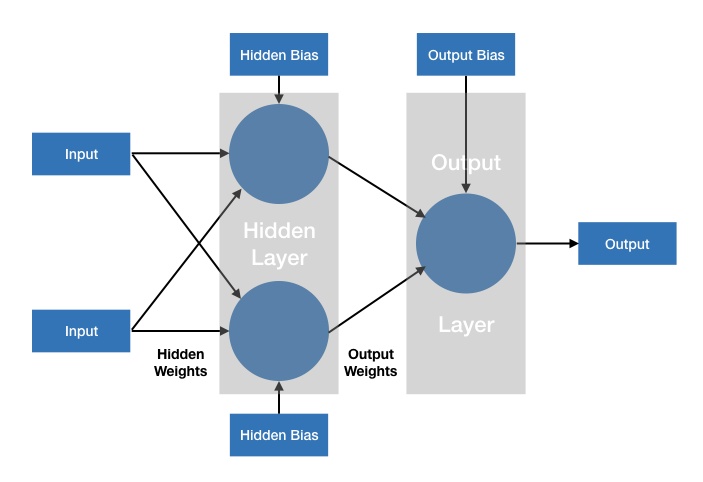

This shows two inputs and one output

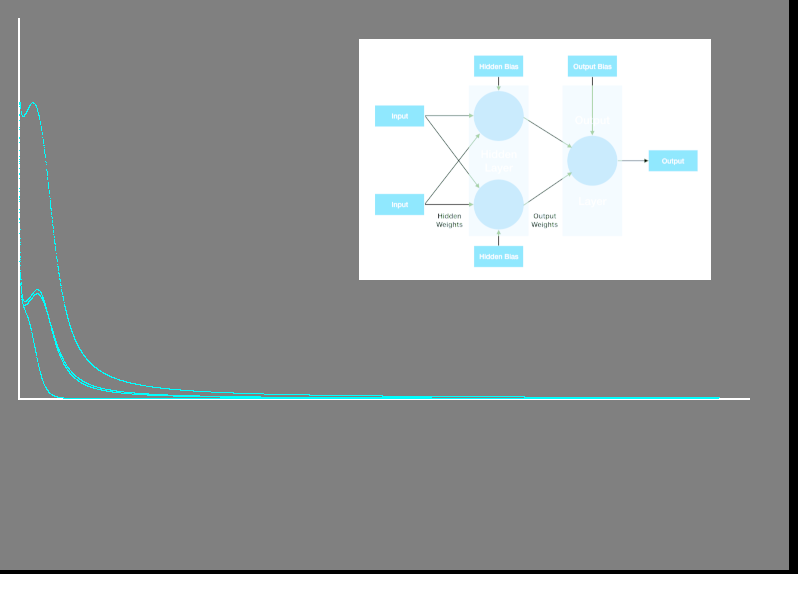

THe code below is one of many versions. It follows the learning process as it stabilises as shown graphically. Other versions can take the learned values of biasses and weightingsand 'hard wire' them into the code as data, making a single-purpose neural network that 'knows' how to do AND, XOR or whatever.

nomainwin

WindowWidth =800

WindowHeight =600

open "Neural Network" for graphics_nsb as #wg

#wg "trapclose quit"

loadbmp "scr", "networkDiag.bmp"

#wg "fill darkgray ; drawbmp scr 360 40"

#wg "color white ; size 2"

#wg "goto 20 20 ; down ; goto 20 400 ; down ; goto 750 400 ; size 1 ; color cyan"

lr = 0.1 ' learning rate

numInputs = 2

numHiddenNodes = 2

numOutputs = 1

dim hiddenLayer( numHiddenNodes -1)

dim outputLayer( numOutputs -1) ' <<< was -1)

dim hiddenLayerBias( numHiddenNodes -1)

dim outputLayerBias( numOutputs -1) ' <<< was -1)

dim hiddenWeights( numInputs -1, numHiddenNodes -1)

dim outputWeights( numHiddenNodes -1, numOutputs -1) ' <<< was -1)

'' create random values for hidden weights and bias

for i =0 to numHiddenNodes -1

for j =0 to numInputs -1

hiddenWeights( j, i) =2 *rnd( 0) -1

next j

hiddenLayerBias( i) =2 *rnd( 0) -1

next i

for i =0 to numOutputs -1

for j =0 to numHiddenNodes -1

outputWeights( j, i) =2 *rnd( 0) -1

next j

outputLayerBias( i) =2 * rnd( 0) -1

next i

''training set inputs and outputs

numTrainingSets = 4

dim trainingInputs( numTrainingSets -1, numInputs -1)

for i = 0 to numTrainingSets -1

for j = 0 to numInputs -1

read a

trainingInputs( i , j ) =a ' <<<< missing =a

next j

next i

data 0.0, 0.0

data 1.0, 0.0

data 0.0, 1.0

data 1.0, 1.0

dim trainingOutputs( numTrainingSets -1, numOutputs -1)

for i = 0 to numTrainingSets -1

for j = 0 to numOutputs -1

read a

trainingOutputs( i, j) =a ' missing '=a'

next j

next i

data 0.0

data 0.0

data 0.0

data 1.0

'' Iterate through the entire training for a number of epochs

dim deltaHidden( numHiddenNodes -1)

dim deltaOutput( numOutputs -1) ' <<< was -1)

dim trainingSetOrder( numTrainingSets -1)

for i = 0 to numTrainingSets - 1

trainingSetOrder( i ) = i

next i

for n =0 to 2.8e4 '2e4

scan

'' As per SGD, shuffle the order of the training set

for i =3 to 1 step -1

r =int( rnd( 0) *( i +1))

temp =trainingSetOrder( i)

trainingSetOrder( i) =trainingSetOrder( r)

trainingSetOrder( r) =temp

next

'print trainingSetOrder( 0); " "; trainingSetOrder( 1); " "; trainingSetOrder( 2); " "; trainingSetOrder( 3)

'' Cycle through each of the training set elements

for x =0 to numTrainingSets -1

i = trainingSetOrder( x)

'' Compute hidden layer activation

for j =0 to numHiddenNodes -1

activation = hiddenLayerBias( j)

for k =0 to numInputs -1

activation = activation + trainingInputs( i, k) * hiddenWeights( k, j )

next k

hiddenLayer( j) = sigmoid( activation)

next j

'' Compute output layer activation

for j =0 to numOutputs -1

activation = outputLayerBias( j)

for k =0 to numHiddenNodes -1 '<<<<<<< -k<

activation = activation + hiddenLayer( k) * outputWeights( k, j)

next k

outputLayer( j) = sigmoid( activation)

next j

'' Compute change in output weights

for j =0 to numOutputs-1

dError = ( trainingOutputs( i, j) - outputLayer( j))

deltaOutput( j) = dError * dsigmoid( outputLayer( j))

next j

'' Compute change in hidden weights

for j =0 to numHiddenNodes -1

dError = 0.0

for k =0 to numOutputs -1

dError = dError + deltaOutput( k) * outputWeights( j, k)

next k

deltaHidden( j) = dError * dsigmoid( hiddenLayer( j))

next j

'' Apply change in output weights

for j =0 to numOutputs -1

outputLayerBias( j) = outputLayerBias( j) + deltaOutput( j) * lr

for k =0 to numHiddenNodes -1

outputWeights( k, j) = outputWeights( k, j) + hiddenLayer( k) * deltaOutput( j) * lr

next k

next j

'' Apply change in hidden weights

for j =0 to numHiddenNodes -1

hiddenLayerBias( j) = hiddenLayerBias( j) + deltaHidden( j) * lr

for k =0 to numInputs- 1

hiddenWeights( k, j) = hiddenWeights( k, j) + trainingInputs( i, k) * deltaHidden( j) * lr

next k

next j

next x '<<<<<<<

fout = 0.0

for i = 0 to numOutputs -1

fout = fout +abs( deltaOutput( i ) ) ' <<<<<<< no += yet in LB

next i

#wg "set "; 20 +int( n /2.4e4 *600); " "; int( 400 -2000 *fout)

if n mod 10 = 0 then

'print n , using( "##.####", fout)

end if

next n

#wg "getbmp scr 1 1 800 600"

filedialog "Save as", "*.bmp", fn$

if fn$ <>"" then bmpsave "scr", fn$

'print n, fout

'print "[ end game ]"

wait

end

function sigmoid( x) ' <<<<<<<

sigmoid = 1 / ( 1 + exp( 0 -x ) ) ' LB's lack of unitary minus

end function

function dsigmoid( x) ' <<<<<<<

dsigmoid = x * ( 1 - x )

end function

sub quit h$

close #wg

end

end sub

The second one uses pre-calculated values to implement an AND gate

'Saved output from training on digital AND gate data

'Hidden layer- ____weights______ and bias.

' -3.629 -3.907 5.097

' -2.571 -2.194 2.766

'Output layer- ____weights______ and bias.

' -8.039 -4.602 4.845

'Compute hidden layer activation =bias +sumOf( inputs *weights)

numInputs = 2

numHiddenNodes = 2

numOutputs = 1

dim trainingInputs( 0, 1)

trainingInputs( 0, 0) =0

trainingInputs( 0, 1) =0

dim hiddenLayer( 1)

dim hiddenLayerBias( 1)

hiddenLayerBias( 0) =5.097

hiddenLayerBias( 1) =2.766

dim outputLayerBias( 0)

outputLayerBias( 0) =4.845

dim hiddenWeights( 1, 1)

hiddenWeights( 0, 0) =0 -3.629

hiddenWeights( 0, 1) =0 -3.907

hiddenWeights( 1, 0) =0 -2.571

hiddenWeights( 1, 1) =0 -2.194

dim outputWeights( 1, 0)

outputWeights( 0, 0) =0 -8.039

outputWeights( 1, 0) =0 -4.602

dim outputLayer( 0)

for test =1 to 50 ' check generates near-correct values for AND gate

trainingInputs( 0, 0) =int( 2 *rnd( 1))

trainingInputs( 0, 1) =int( 2 *rnd( 1))

for j =0 to 1

activation = hiddenLayerBias( j)

for k =0 to 1

activation =activation +trainingInputs( i, k) *hiddenWeights( k, j )

next k

' calc resulting neuron output

hiddenLayer( j) = sigmoid( activation)

next j

'' Compute output layer activation

for j =0 to 0

activation = outputLayerBias( j)

for k =0 to 1

activation = activation + hiddenLayer( k) * outputWeights( k, j)

next k

' calc resulting neuron output

outputLayer( j) = sigmoid( activation)

next j

print trainingInputs( 0, 0); " "; trainingInputs( 0, 1); " => "; using( "##.###", outputLayer( 0)); " ";

if outputLayer( 0) <0.1 then print "low"

if outputLayer( 0) >0.9 then print "high"

if outputLayer( 0) >=0.1 and outputLayer( 0) <=0.9 then print "WHOOPS!"

next test

wait

function sigmoid( x) ' <<<<<<<

sigmoid = 1 /( 1 +exp( 0 -x ) ) ' LB's lack of unitary minus

end function

function dsigmoid( x) ' <<<<<<<

dsigmoid = x *( 1 -x )

end function